The Doctrine of Double Effect: Navigating Ethical Dilemmas in AI

As artificial intelligence (AI) systems become increasingly sophisticated and autonomous, they face complex ethical dilemmas that have long been the subject of philosophical debate. One framework for reasoning about such dilemmas is the **Doctrine of Double Effect (DDE)**, a principle that has been used for centuries to evaluate the moral permissibility of actions that have both positive and negative consequences.

The DDE has four main conditions that must be met for an action to be considered morally permissible:

1. The act itself must be morally good or indifferent.

2. The bad effect must not be intended.

3. The good effect must arise at least as immediately as the bad effect; that is, the bad effect must not be the means by which the good effect is achieved.

4. The good effect must outweigh the bad effect.
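
The four conditions can be read as a checklist. The Python sketch below is one minimal way to express that, not an established implementation: the `Action` fields, the function names, and the numeric values are all hypothetical, and condition 3 is modeled under the common reading that the bad effect must not be the means to the good effect.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Action:
    """Hypothetical description of a candidate action (all fields are illustrative)."""
    name: str
    act_is_good_or_neutral: bool  # condition 1: the act itself is good or indifferent
    bad_effect_intended: bool     # condition 2: is the bad effect intended?
    bad_is_means_to_good: bool    # condition 3: is the good reached only through the bad?
    good_value: float             # condition 4: assumed magnitude of the good effect
    bad_value: float              # condition 4: assumed magnitude of the bad effect


def dde_violations(action: Action) -> List[int]:
    """Return the numbers of the DDE conditions that the action fails."""
    violations = []
    if not action.act_is_good_or_neutral:
        violations.append(1)
    if action.bad_effect_intended:
        violations.append(2)
    if action.bad_is_means_to_good:
        violations.append(3)
    if action.good_value <= action.bad_value:
        violations.append(4)
    return violations


def dde_permissible(action: Action) -> bool:
    """An action passes only if it fails none of the four conditions."""
    return not dde_violations(action)


# Illustrative encodings of two trolley cases; the values are assumptions.
switch = Action("divert trolley", True, False, False, good_value=5, bad_value=1)
footbridge = Action("push person", True, False, True, good_value=5, bad_value=1)

print(dde_permissible(switch))     # True
print(dde_violations(footbridge))  # [3]
```

The sketch takes every field as a given input. Deciding what an AI system "intends", or whether a harm is a means or a side effect, is the hard part that the code leaves open.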

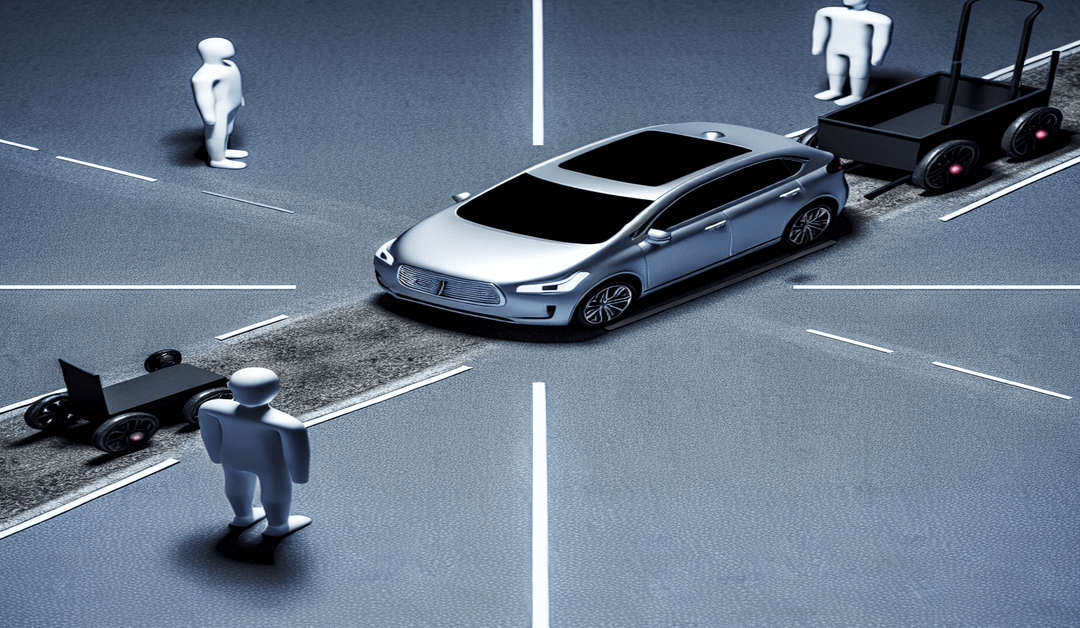

In the context of AI, the DDE becomes particularly relevant when considering scenarios like the **Trolley Problem**. This thought experiment presents a situation where a decision must be made between two undesirable outcomes, such as diverting a runaway trolley so that it kills one person instead of five. Variations of the Trolley Problem, like the Fat Man scenario, in which a person must be pushed into the trolley's path to stop it, highlight the distinction between harm caused directly as a means and harm caused indirectly as a side effect, with the latter often perceived as more morally permissible.

The Challenges of Applying the DDE in AI

As AI systems become more autonomous, they will increasingly face decisions that have both positive and negative consequences. For example, a self-driving car may need to choose between colliding with a pedestrian and swerving in a way that could harm its passengers. In such scenarios, the DDE’s emphasis on intention and on the nature of the act complicates the design of AI systems that must decide in a fraction of a second.

Moreover, the DDE’s requirement that the good effect outweigh the bad effect raises questions about how to quantify and compare different outcomes. How do we weigh the value of human lives against other considerations like property damage or economic impact? These are not easy questions to answer, and they highlight the need for ongoing research and discussion in the field of AI ethics.
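
To make the difficulty concrete, here is a small Python sketch of one possible way to compare outcomes: a weighted sum over effect categories. The category names, the weights, and the amounts are invented for illustration and are not proposed values; the point is only that the verdict on the fourth condition depends entirely on weights that someone has to choose.

```python
from typing import Dict


def weighted_total(effects: Dict[str, float], weights: Dict[str, float]) -> float:
    """Scale each effect by its category weight and sum the results.

    A category with no weight raises KeyError instead of silently counting as zero.
    """
    return sum(weights[category] * amount for category, amount in effects.items())


def good_outweighs_bad(good: Dict[str, float], bad: Dict[str, float],
                       weights: Dict[str, float]) -> bool:
    """Condition 4 under the assumed weighting: the good total must exceed the bad."""
    return weighted_total(good, weights) > weighted_total(bad, weights)


# Hypothetical outcome: one injury avoided at the cost of property damage.
good = {"injuries_avoided": 1}
bad = {"property_damage_usd": 50_000}

# Two equally arbitrary weightings give opposite verdicts.
weights_a = {"injuries_avoided": 100_000.0, "property_damage_usd": 1.0}
weights_b = {"injuries_avoided": 10_000.0, "property_damage_usd": 1.0}

print(good_outweighs_bad(good, bad, weights_a))  # True
print(good_outweighs_bad(good, bad, weights_b))  # False
```

A single scalar weight per category is itself a strong assumption; some ethical views hold that harms of different kinds cannot be traded off on one scale at all.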

Formalizing and Automating the DDE

Despite the challenges, researchers are working on ways to formalize and automate the DDE using frameworks like first-order modal logic. By creating a clear set of rules and criteria for evaluating the moral permissibility of actions, these efforts could help in building or verifying AI systems that comply with the DDE.
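
As a rough illustration of what such a formalization might look like, the four conditions can be collected into a single formula. This is a sketch, not the notation of any particular published system. Every symbol is an assumed name: $b_a$ and $g_a$ stand for the bad and good effects of action $a$, $\mathbf{I}$ is a modal operator read as "the agent intends", $\mathrm{Means}(x, y)$ says that $x$ is the means to $y$, and $u$ is a utility function.

```latex
\[
\mathrm{Permissible}(a) \;\leftrightarrow\;
  \neg\,\mathrm{Wrong}(a)
  \;\wedge\; \neg\,\mathbf{I}(b_a)
  \;\wedge\; \neg\,\mathrm{Means}(b_a,\, g_a)
  \;\wedge\; u(g_a) > u(b_a)
\]
```

Each conjunct corresponds to one of the four conditions in order, which is what makes the doctrine a candidate for automated checking once the predicates themselves can be evaluated.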

However, the DDE is neither perfect nor universally accepted. Critics argue that it relies too heavily on the concept of intention, which can be difficult to define or ascertain in the context of AI systems. Others point out that the DDE does not provide clear guidance on how to weigh different outcomes or handle situations where all available options have negative consequences.

The Future of AI Ethics

As AI continues to advance and take on more complex tasks, we need robust frameworks for ensuring that these systems behave in ways that align with human values and ethics. The DDE is one tool in this toolkit, but it is neither the only one nor the best.

Moving forward, it will be crucial for researchers, policymakers, and industry leaders to engage in ongoing dialogue and collaboration around the ethical implications of AI. This includes not only technical challenges like formalizing the DDE, but also broader questions about the values and principles that should guide the development and deployment of AI systems.

Ultimately, the goal should be to create AI systems that are not only intelligent and efficient, but also **transparent**, **accountable**, and **beneficial** to humanity as a whole. By grappling with complex ethical questions like the DDE, we can take important steps towards this goal and ensure that the future of AI is one that we can all be proud of.


-> Original article and inspiration provided by Lance Eliot
