OpenAI and Anthropic Join Forces with U.S. AI Safety Institute to Ensure Responsible AI Development

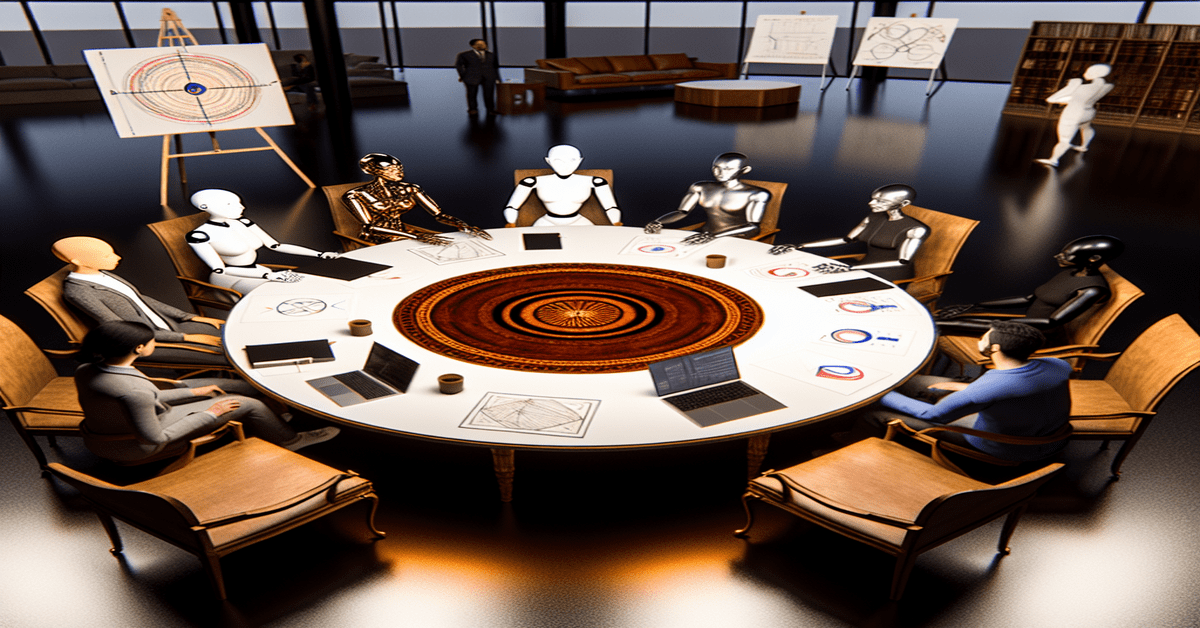

In a move that could shape the future of artificial intelligence, OpenAI and Anthropic have agreed to collaborate with the U.S. AI Safety Institute, which is housed within the National Institute of Standards and Technology (NIST), to test and evaluate their upcoming AI models for safety. The partnership marks a significant step toward responsible AI development and deployment.

The collaboration will grant the U.S. AI Safety Institute early access to major new AI models from both OpenAI and Anthropic, enabling a thorough evaluation of their capabilities and potential risks. By working closely with these companies, the institute aims to develop robust methods for mitigating the risks that advanced AI systems may pose.

Advancing the Science of AI Safety

The primary goal of this collaboration is to advance the science of AI safety, addressing the growing concerns surrounding the risks posed by increasingly sophisticated AI systems. As AI continues to evolve at a rapid pace, it is crucial to establish a framework for ensuring that these powerful technologies are developed and deployed in a manner that prioritizes safety and ethical considerations.

By providing early access to their AI models, OpenAI and Anthropic are demonstrating their commitment to transparency and accountability in AI development. This proactive approach will allow the U.S. AI Safety Institute to conduct comprehensive assessments and provide valuable feedback to the companies on potential safety improvements.

Establishing AI Safety Standards and Regulations

The collaboration between OpenAI, Anthropic, and the U.S. AI Safety Institute is part of a broader effort to establish clear standards and regulations for AI safety. This initiative aligns with the Biden-Harris administration’s October 2023 Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, which emphasizes the importance of developing AI systems that are safe, trustworthy, and aligned with American values.

Additionally, the California legislature's recent passage of the AI safety bill SB 1047 highlights the growing recognition of the need for AI safety regulations at the state level. As more governments and organizations prioritize AI safety, collaborations like this one will play a crucial role in shaping the future of AI governance.

Implications for the AI Industry

The partnership between OpenAI, Anthropic, and the U.S. AI Safety Institute has significant implications for the AI industry as a whole. By setting a precedent for collaboration and transparency in AI safety testing, this initiative could encourage other companies to follow suit and prioritize responsible AI development.

Moreover, the insights gained from this collaboration could inform the development of industry-wide best practices and standards for AI safety. As the AI landscape continues to evolve, it is essential that companies, researchers, and policymakers work together to ensure that the benefits of AI are realized while minimizing potential risks.

Engaging the AI Community

As this collaboration unfolds, it is crucial for the AI community to actively engage in the discussion surrounding AI safety. By sharing your thoughts, experiences, and insights on this topic, you can contribute to the ongoing conversation and help shape the future of responsible AI development.

We encourage you to like, comment, and share this post to raise awareness about the importance of AI safety and the need for collaborative efforts to address the challenges posed by advanced AI systems. Together, we can work towards a future where AI technologies are developed and deployed in a manner that benefits society as a whole.

#AISafety #ResponsibleAI #CollaborationForGood

-> Original article and inspiration provided by Shirin Ghaffary

-> Connect with one of our AI Strategists today at Opahl Technologies