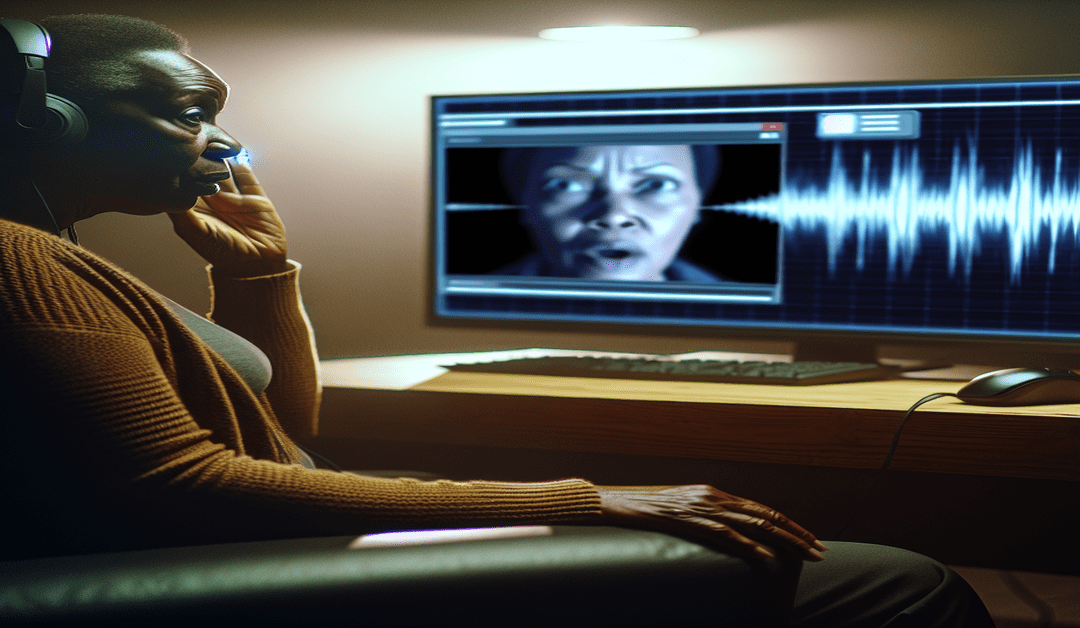

The Dangers of AI Voice Cloning: Protecting Yourself from Identity Theft and Scams

Artificial intelligence (AI) has made remarkable strides in many fields, including voice cloning. While this technology could revolutionize industries such as entertainment, education, and customer service, it also poses significant risks when criminals misuse it. Consumer Reports recently published an article highlighting how easily scammers can use AI voice cloning apps to impersonate individuals, leading to identity theft and financial fraud[1].

The Alarming Accessibility of Voice Cloning Technology

One of the most concerning aspects of AI voice cloning is how little audio is required to create a highly realistic voice clone. Consumer Reports found that some apps need only a few seconds of recorded speech, which can be easily obtained from public platforms like YouTube or social media[1][2][3]. This means that **virtually anyone’s voice can be cloned without their knowledge or consent**, which makes the technology a powerful tool in the hands of criminals.

Moreover, the majority of voice cloning tools lack robust safeguards to prevent unauthorized cloning. In their investigation, Consumer Reports discovered that only two apps, Descript and Resemble AI, had implemented additional measures to ensure consent[4]. This lack of industry-wide security standards leaves individuals vulnerable to voice cloning attacks.

The Art of Deception: How Scammers Employ Cloned Voices

Scammers employ various tactics to trick victims into believing they are communicating with a trusted individual. By using a cloned voice, they can create a sense of urgency or emergency, pressuring the victim to send money or reveal sensitive personal information[1][2][5]. These scams can take many forms, from impersonating a family member in need to posing as a company executive requesting a financial transfer.

The **prevalence of voice cloning scams is alarming**: a significant number of people have experienced such an attack or know someone who has fallen victim to one[3][5]. The financial and emotional toll of these scams can be devastating, which underscores the need for greater awareness and stronger protective measures.

Combating Voice Cloning Scams: Recommendations and Future Outlook

To combat the misuse of voice cloning technology, Consumer Reports suggests implementing stricter verification processes for app users, such as requiring credit card information for account creation[4]. Additionally, app developers must ensure that users fully understand the legal implications of misusing voice cloning technology and provide clear guidelines for obtaining consent from individuals whose voices are being cloned.

As AI continues to advance, the **combination of voice cloning with other tools, such as deepfakes and language models like ChatGPT, can create even more convincing scams**[2][3]. This makes it important to stay informed about the latest technological developments and their potential risks.

Empowering Yourself Against Voice Cloning Scams

While the rise of voice cloning scams may seem daunting, there are steps you can take to protect yourself and your loved ones:

1. Be cautious when sharing audio or video content online, as it can be used to create voice clones without your consent.

2. If you receive an unexpected request for money or personal information, verify the identity of the person through a trusted channel before taking any action.

3. Educate yourself and others about the risks of voice cloning scams and the tactics employed by criminals.

4. Support initiatives that promote responsible AI development and advocate for stronger security measures in voice cloning apps.

By staying vigilant and proactive, we can work together to mitigate the risks posed by AI voice cloning and ensure that this technology is used for the benefit of society, not as a tool for deception and fraud.

#VoiceCloning #IdentityTheft #AIScams #CyberSecurity #ConsumerProtection

-> Original article and inspiration provided by Consumer Reports

-> Connect with one of our AI Strategists today at Opahl Technologies