The Promise and Pitfalls of AI in Self-Diagnosis: A Balanced Perspective

In healthcare, Artificial Intelligence (AI) is increasingly being used to support medical diagnosis. One of its most intriguing applications is self-diagnosis: using AI-powered tools to identify health issues without an initial consultation with a healthcare professional. The idea is both exciting and controversial. In this blog post, we explore the potential benefits, current capabilities, limitations, and ethical considerations of AI in self-diagnosis.

Potential Benefits of AI in Self-Diagnosis

One of the most significant advantages of using AI for self-diagnosis is its potential to improve **accessibility** to healthcare. In areas with limited medical resources or for individuals who face barriers to accessing healthcare services, AI-powered tools could provide a first line of defense in identifying potential health issues. Additionally, AI systems can process vast amounts of data quickly, potentially leading to **faster diagnoses** and more **efficient** healthcare delivery.

Another potential benefit of AI in self-diagnosis is its ability to provide **personalized** diagnostic suggestions. By analyzing an individual’s unique health data, including medical history, lifestyle factors, and genetic information, AI algorithms could generate tailored recommendations, paving the way for more targeted and effective interventions.

Current Capabilities of AI in Self-Diagnosis

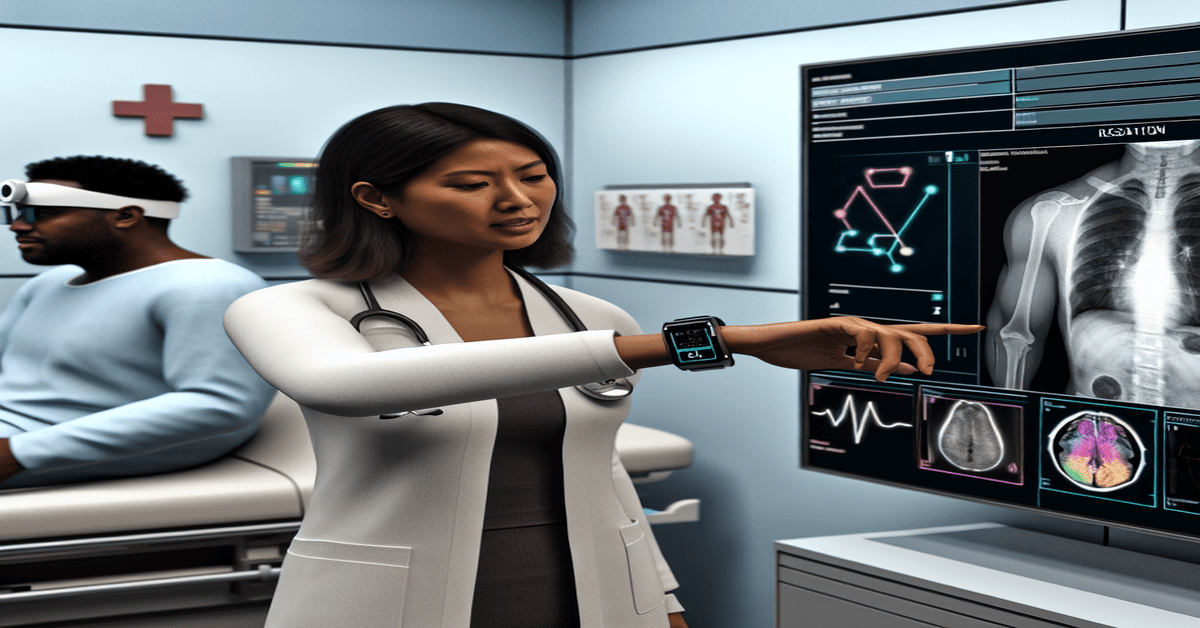

AI-driven symptom checkers are already available to consumers: users enter their symptoms and receive a list of possible conditions. These tools are imperfect and can get a diagnosis wrong, but they can serve as a useful starting point for individuals seeking to understand their health concerns better. Beyond symptom checkers, AI is being used in medical imaging to help diagnose conditions such as cancer, diabetic retinopathy, and cardiovascular diseases. Wearable devices integrated with AI can also monitor vital signs and detect anomalies that may indicate underlying health issues.
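To make the wearable example concrete, here is a minimal sketch of one simple way a device might flag an unusual reading. It is an illustration only, not how any particular product works: the function name `flag_anomalies`, the window size, the threshold, and the sample heart-rate values are all assumptions made up for this post.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return the indices of readings that deviate sharply from recent history.

    Each reading is compared with the mean and standard deviation of the
    `window` readings before it (a rolling z-score). This is a toy rule,
    not a clinically validated method.
    """
    flagged = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # A perfectly flat baseline gives no scale to measure deviation.
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical resting heart-rate samples (beats per minute) with one spike.
resting_heart_rate = [62, 64, 63, 61, 65, 63, 64, 110, 63, 62]
print(flag_anomalies(resting_heart_rate))  # prints [7]
```

A flagged index here only means "this reading looks different from the last few", which is why such signals are a prompt to seek advice rather than a diagnosis.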

Limitations and Challenges of AI in Self-Diagnosis

Despite this promise, AI in self-diagnosis faces significant limitations and challenges. One of the primary concerns is the **accuracy** and **reliability** of AI-generated diagnoses. While AI has made remarkable progress in recent years, it is not infallible. There is a risk of misdiagnosis or of overlooking critical symptoms, which could lead to delayed treatment or inappropriate interventions.
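One way to see why accuracy matters so much is to work through the arithmetic of a screening tool. The sketch below uses the standard definitions of sensitivity, specificity, and positive predictive value; the counts are hypothetical numbers chosen for illustration, not results from any real symptom checker.

```python
def diagnostic_metrics(tp, fp, fn, tn):
    """Compute standard screening metrics from confusion-matrix counts.

    tp: sick users correctly flagged      fp: healthy users wrongly flagged
    fn: sick users the tool missed        tn: healthy users correctly cleared
    """
    return {
        "sensitivity": tp / (tp + fn),  # share of sick users who are caught
        "specificity": tn / (tn + fp),  # share of healthy users who are cleared
        "ppv": tp / (tp + fp),          # chance a flagged user is actually sick
    }


# Hypothetical scenario: 1,000 users, 10 of whom have the condition.
# The tool catches 9 of the 10 and wrongly flags 99 of the 990 healthy users.
metrics = diagnostic_metrics(tp=9, fp=99, fn=1, tn=891)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In this made-up scenario the tool is right 90% of the time for both sick and healthy users, yet only about 8% of the people it flags actually have the condition, because the condition is rare. That gap is one reason a flagged result calls for follow-up with a professional.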

Another challenge is training data. The accuracy of AI diagnoses depends heavily on the quality and representativeness of the data used to develop the models. Poor-quality or biased datasets can produce inaccurate or skewed results, potentially exacerbating health disparities.

Regulatory Frameworks and Patient Education

As AI-based diagnostic tools become more prevalent, there is a pressing need for clear **regulatory frameworks** to ensure that these tools meet rigorous safety and efficacy standards. Regulators must strike a balance between fostering innovation and protecting patient safety. Patients, in turn, need to be educated about how to use these tools correctly and to understand their limitations. Overreliance on AI-generated diagnoses without proper context or medical oversight could lead to harm, such as delayed treatment or inappropriate interventions.

Ethical Considerations in AI-Powered Self-Diagnosis

The use of AI in self-diagnosis raises significant ethical questions that must be carefully considered. One of the most pressing concerns is the protection of **patient privacy**. As AI tools rely on the collection and analysis of personal health data, robust data protection policies and secure infrastructure are essential to safeguard sensitive information.

Another ethical challenge is the question of **liability**. If an AI system provides an incorrect diagnosis that leads to adverse outcomes, who is held responsible? Is it the developers of the AI tool, the healthcare providers who rely on its recommendations, or the patients themselves? Clear guidelines and legal frameworks are needed to address these complex issues.

Future Outlook and Integration with Healthcare Systems

For AI to reach its full potential in self-diagnosis, it needs to be seamlessly integrated into existing healthcare systems. This requires close collaboration between AI developers, healthcare providers, and policymakers to ensure that these tools are developed and deployed in a manner that aligns with the goals and values of the healthcare system.

It is also important to recognize that AI is not intended to replace human healthcare professionals but rather to augment their capabilities. Human oversight and validation of AI-generated diagnoses will remain crucial to ensure the safety and effectiveness of these tools.

As AI-powered self-diagnosis matures, it is essential to approach the technology with a balanced perspective. While the potential benefits are significant, we must also be mindful of the limitations, challenges, and ethical considerations involved. By fostering open dialogue, collaborative research, and responsible innovation, we can use AI to improve healthcare access and outcomes while prioritizing patient safety and well-being.

#AIHealthcare #SelfDiagnosis #FutureOfMedicine

-> Original article and inspiration provided by Medical Buyer
