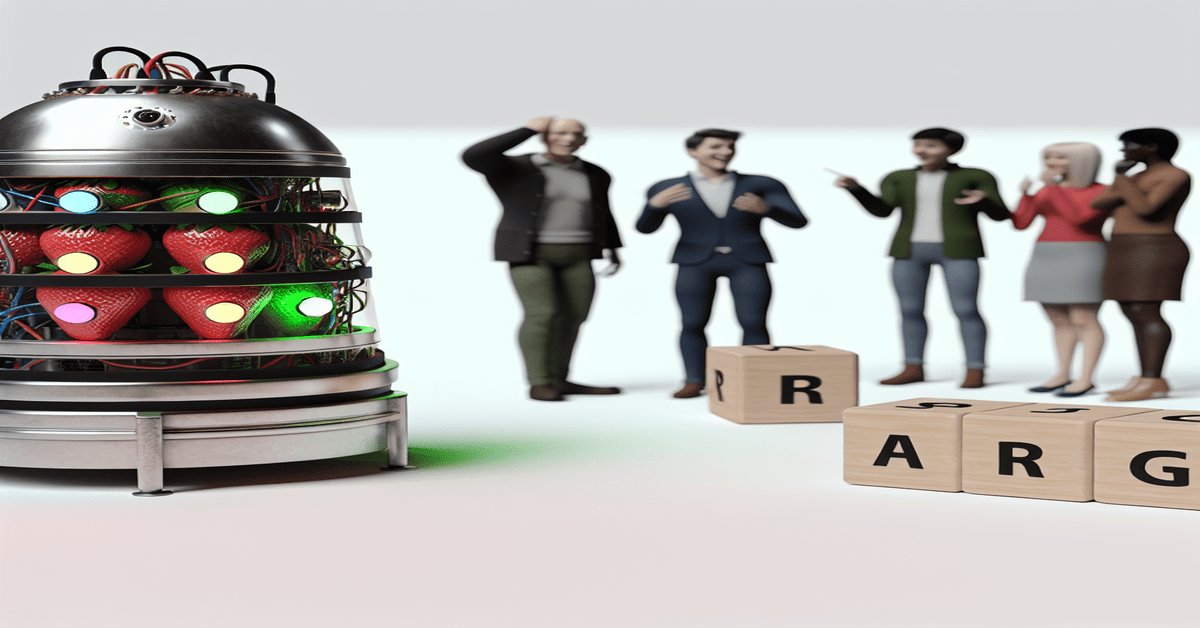

The Great AI Strawberry Conundrum: Exposing the Quirks and Limitations of Language Models

In the rapidly evolving world of artificial intelligence, we often find ourselves marveling at the incredible feats achieved by cutting-edge language models. From engaging in witty conversations to generating coherent articles, these AI systems have demonstrated remarkable capabilities. However, a recent incident involving a seemingly simple question about the word “strawberry” has left many scratching their heads and questioning the true extent of AI’s understanding.

The Bizarre Strawberry Incident

The Daily Star recently reported on a peculiar occurrence that took the AI community by storm. When asked the straightforward question, “How many ‘Rs’ are in the word ‘strawberry’?”, a majority of AI models, including those developed by industry giants like OpenAI, consistently provided the incorrect answer of two. The correct answer, as any human would easily point out, is three.

This incident quickly gained traction on social media, with users expressing a mix of amusement and skepticism. Many found it baffling that advanced AI systems, capable of tackling complex tasks, could stumble on such a basic question. The ridicule and criticism that followed reflected growing concern about the limitations and quirks of AI models.

Understanding the Root of the Problem

To understand why AI models struggled with this seemingly trivial question, we need to look at how they process text. A person asked the question can simply look at the word and count its letters. Language models never see letters directly: text is first split into smaller units called tokens, and each token is converted into a numeric ID before the model processes it. Tokens typically represent whole words or subword fragments, and only sometimes individual characters.

Depending on the tokenizer, the word “strawberry” might be broken into pieces such as “straw,” “berr,” and “y.” Tokenization lets the model handle large volumes of text efficiently and capture patterns and relationships between words. The trade-off is that the letters inside each token are hidden from the model. To count the “r”s, it must have learned how each piece is spelled (one “r” in “straw,” two in “berr”) and then add those counts correctly, which creates a blind spot for questions about specific characters within a word.
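To make this concrete, here is a minimal Python sketch of subword tokenization. It assumes a hypothetical toy vocabulary (`VOCAB`) containing the illustrative pieces “straw,” “berr,” and “y,” plus single letters as a fallback, and uses simple greedy longest-match splitting. Real tokenizers, such as those based on byte-pair encoding, learn far larger vocabularies from data and may split “strawberry” differently; the `tokenize` function here is illustrative only.

```python
# Hypothetical toy vocabulary mirroring the article's example pieces.
VOCAB = {"straw": 0, "berr": 1, "y": 2}
# Add single lowercase letters as a fallback so any lowercase word can be split.
for ch in "abcdefghijklmnopqrstuvwxyz":
    VOCAB.setdefault(ch, len(VOCAB))


def tokenize(word: str) -> list[str]:
    """Greedy longest-match subword tokenization against VOCAB."""
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest remaining piece first, shrinking until one is known.
        for end in range(len(word), i, -1):
            piece = word[i:end]
            if piece in VOCAB:
                tokens.append(piece)
                i = end
                break
        else:
            raise ValueError(f"cannot tokenize {word[i]!r}")
    return tokens


tokens = tokenize("strawberry")
ids = [VOCAB[t] for t in tokens]

print(tokens)  # ['straw', 'berr', 'y']
# What the model actually receives: integer IDs with no letters inside them.
print(ids)  # [0, 1, 2]
# Counting at the character level is trivial when the letters are visible.
print("strawberry".count("r"))  # 3
# Answering from tokens requires already knowing how each piece is spelled.
print({t: t.count("r") for t in tokens})  # {'straw': 1, 'berr': 2, 'y': 0}
```

In this sketch the model would only ever see `[0, 1, 2]`. The correct answer of three exists only if the per-token spellings have been memorized and summed, which is exactly the step where models can slip.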

The Implications for AI Development

The strawberry incident may seem like a minor glitch, but it underscores some significant challenges in AI development. It raises questions about the **robustness** and **reliability** of language models, especially when it comes to handling edge cases or unconventional queries.

Moreover, it highlights the need for AI systems to possess a deeper understanding of language beyond superficial pattern recognition. While models can generate fluent and contextually relevant responses, incidents like this suggest that their grasp of fundamental language concepts may be limited.

As the AI industry continues to evolve, researchers and developers need to address these limitations head-on. Techniques such as **few-shot learning**, **transfer learning**, and **adversarial training** may help models handle a wider range of scenarios and develop a more nuanced understanding of language.

Balancing Expectations and Reality

The strawberry incident serves as a reminder that, despite the impressive strides made in AI, we must approach these systems with a balanced perspective. While AI models excel at certain tasks, they are not infallible and can exhibit surprising quirks and limitations.

As businesses and individuals increasingly rely on AI for various applications, it is essential to maintain a critical eye and not blindly trust the outputs of these models. **Human oversight** and **validation** remain crucial in ensuring the accuracy and reliability of AI-generated content.
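For a question like this one, validation can be as simple as checking the model's answer against a deterministic count. The sketch below assumes the model's reply arrives as a plain string and that the claimed number appears as digits or a small number word; the helper names `extract_count` and `validate_letter_count` are hypothetical and not part of any AI vendor's API.

```python
import re
from typing import Optional, Tuple

# Hypothetical: small number words a model's reply might contain.
NUMBER_WORDS = {"zero": 0, "one": 1, "two": 2, "three": 3, "four": 4, "five": 5}
# Match either a run of digits or one of the number words, as a whole word.
COUNT_PATTERN = re.compile(r"\b(\d+|" + "|".join(NUMBER_WORDS) + r")\b")


def extract_count(reply: str) -> Optional[int]:
    """Return the first number (digits or word) found in the reply, if any."""
    match = COUNT_PATTERN.search(reply.lower())
    if match is None:
        return None
    found = match.group(1)
    return int(found) if found.isdigit() else NUMBER_WORDS[found]


def validate_letter_count(word: str, letter: str, reply: str) -> Tuple[bool, int]:
    """Compare the model's claimed count with an exact character count."""
    actual = word.lower().count(letter.lower())
    return extract_count(reply) == actual, actual


ok, actual = validate_letter_count("strawberry", "r", "There are two R's in strawberry.")
print(ok, actual)  # False 3
```

This check only looks at the first number in the reply, so answers that mention several numbers would need stricter parsing. It is a starting point for flagging outputs for human review, not a replacement for that review.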

Embracing the Journey of AI Development

The AI strawberry conundrum may have elicited a few chuckles and raised some eyebrows, but it also opens up valuable discussions about the current state and future direction of AI development. It reminds us that the journey towards truly intelligent and comprehensive AI systems is an ongoing process, filled with challenges and opportunities for growth.

As an industry, we must embrace these moments of humility and use them as catalysts for innovation and improvement. By acknowledging and addressing the limitations of AI models, we can pave the way for more robust, reliable, and trustworthy systems that can better navigate the complexities of human language and reasoning.

In conclusion, the AI strawberry incident may seem like a minor hiccup in the grand scheme of things, but it serves as a valuable lesson for the AI community. It highlights the need for continued research, development, and collaboration to push the boundaries of what AI can achieve while maintaining a grounded perspective on its current capabilities.

As we move forward, let us embrace the challenges, learn from the quirks, and work towards building AI systems that not only impress us with their outputs but also demonstrate a deeper understanding of the nuances and intricacies of human language. Together, we can shape a future where AI and human intelligence complement each other, leading to unprecedented advancements and solutions to the complex problems we face.

#ArtificialIntelligence #LanguageModels #AILimitations #StrawberryConundrum

-> Original article and inspiration provided by Daily Star

-> Connect with one of our AI Strategists today at Opahl Technologies